In today’s world, data has become a vital part of every organization. The amount of data being generated and collected is growing at an unprecedented rate, and this has led to the emergence of the data pipeline as a core concept. In this article, we will explore what a data pipeline is, how it works, and how to implement one effectively.

What is a Data Pipeline?

A data pipeline is a set of processes that enables the collection, transformation, and analysis of large volumes of data from multiple sources. It ensures that data is available in a usable format for end users such as analysts, data scientists, or business leaders. A data pipeline is typically automated and runs either continuously, processing data in real time, or on a schedule in batches, so that data is available when it is needed.
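To make the definition concrete, here is a minimal Python sketch that models a pipeline as three chained stages. It assumes records arrive as hypothetical `sensor_id,reading` text lines; the function names `ingest`, `transform`, and `load`, and the in-memory list used as a destination, are illustrative only. Because each stage is a generator, records flow through one at a time, which loosely mirrors how a streaming pipeline handles data as it arrives.

```python
from typing import Iterable, Iterator


def ingest(lines: Iterable[str]) -> Iterator[dict]:
    """Parse hypothetical 'sensor_id,reading' lines into records, one at a time."""
    for line in lines:
        parts = line.strip().split(",")
        if len(parts) != 2:
            continue  # ignore lines that do not have exactly two fields
        yield {"sensor_id": parts[0], "reading": parts[1]}


def transform(records: Iterable[dict]) -> Iterator[dict]:
    """Convert readings to floats and drop rows whose reading cannot be parsed."""
    for record in records:
        try:
            yield {**record, "reading": float(record["reading"])}
        except ValueError:
            continue  # skip malformed readings instead of failing the whole run


def load(records: Iterable[dict], sink: list) -> None:
    """Append each cleaned record to the destination (a list stands in for a real store)."""
    for record in records:
        sink.append(record)


raw = ["s1,21.5", "s2,not-a-number", "s1,22.0"]
destination: list = []
load(transform(ingest(raw)), destination)
print(destination)
# [{'sensor_id': 's1', 'reading': 21.5}, {'sensor_id': 's1', 'reading': 22.0}]
```

A production pipeline would replace the list with a real sink and run under a scheduler or streaming framework; the sketch only shows the shape of the data flow.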

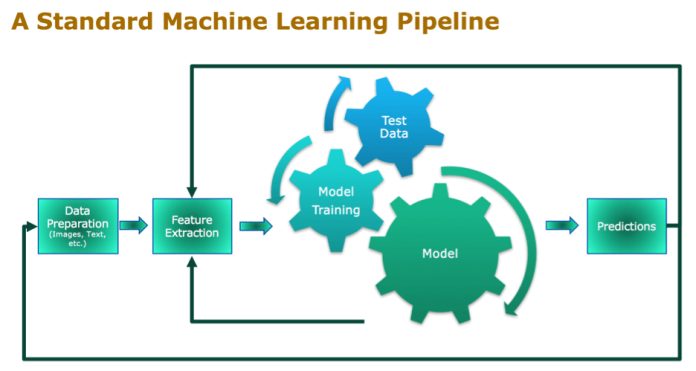

How Does a Data Pipeline Work?

A data pipeline gathers data from several sources, converts it into a usable format, and stores it in a centralized location. This process consists of three main stages: data ingestion, data processing, and data storage.

- Data Ingestion: Data ingestion is the starting point of a data pipeline. It is the stage where data is acquired from multiple sources such as databases, web applications, or IoT devices. Depending on the organization’s needs, data can be gathered in real time or in batches. Once collected, the raw data is typically kept in a landing area, such as a staging area or data lake, before it is processed.

- Data Processing: The next step is data processing, where the raw data is transformed into a usable format for analysis. This typically involves cleaning, filtering, and aggregating the data, as well as enriching it by combining it with other data sources to gain more insights.

- Data Storage: The final step is data storage, where the processed data is stored in a centralized location such as a data warehouse, organized so that end users can easily retrieve and analyze it. Centralized storage gives everyone a single, consistent source of truth, which is essential for making informed business decisions.
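The three stages above can be sketched end to end in a few dozen lines of Python. This is a minimal batch example under stated assumptions: the two CSV strings stand in for real sources, the column names and the revenue-per-region metric are hypothetical, and an in-memory SQLite database (from Python's standard library) stands in for a data warehouse.

```python
import csv
import io
import sqlite3
from collections import defaultdict

# Two hypothetical raw sources: an orders export and a customer lookup table.
ORDERS_CSV = """order_id,customer_id,amount
1,c1,19.99
2,c2,
3,c1,5.01
4,c3,-3.00
5,c2,10.00
"""
CUSTOMERS_CSV = """customer_id,region
c1,EU
c2,US
c3,US
"""


def ingest(raw_csv: str) -> list[dict]:
    """Data ingestion: read a raw CSV source into a list of dicts, unchanged."""
    return list(csv.DictReader(io.StringIO(raw_csv)))


def process(orders: list[dict], customers: list[dict]) -> dict[str, float]:
    """Data processing: clean, filter, enrich, and aggregate the raw orders."""
    region_by_customer = {c["customer_id"]: c["region"] for c in customers}
    totals: dict[str, float] = defaultdict(float)
    for order in orders:
        if not order["amount"]:
            continue  # cleaning: drop rows with a missing amount
        amount = float(order["amount"])
        if amount <= 0:
            continue  # filtering: drop refunds and invalid amounts
        region = region_by_customer.get(order["customer_id"], "UNKNOWN")  # enrichment
        totals[region] += amount  # aggregation: revenue per region
    return dict(totals)


def store(conn: sqlite3.Connection, totals: dict[str, float]) -> None:
    """Data storage: write the processed results to a central table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS revenue_by_region "
        "(region TEXT PRIMARY KEY, revenue REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO revenue_by_region (region, revenue) VALUES (?, ?)",
        totals.items(),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # stands in for a real data warehouse
store(conn, process(ingest(ORDERS_CSV), ingest(CUSTOMERS_CSV)))
for row in conn.execute("SELECT region, ROUND(revenue, 2) FROM revenue_by_region ORDER BY region"):
    print(row)
```

Keeping each stage in its own function means a source or destination can be swapped (for example, reading from an API instead of a CSV string) without touching the processing logic.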

How to Implement a Data Pipeline Effectively

Implementing a data pipeline effectively requires careful planning and consideration of various factors. Below are some best practices to follow.

- Define Data Requirements: The first step in implementing a data pipeline is defining the data requirements. This involves identifying the data sources, the frequency of data collection, and the type of data to be collected. Defining data requirements is essential to ensure that the pipeline is designed to collect the necessary data.

- Choose the Right Technology: Choosing the right technology is critical to the pipeline’s success. Many technologies are available, such as Apache Kafka for event streaming and ingestion, and Apache Storm and Apache Spark for processing data as streams or at large scale. Each has its strengths and weaknesses, and the right choice depends on the specific requirements of the organization.

- Design the Pipeline Architecture: The pipeline architecture defines how data flows from source to destination. It should be designed to handle the expected data volume, velocity, and variety, and it should be scalable and flexible enough to accommodate future data requirements.

- Monitor and Debug the Pipeline: Monitoring and debugging the pipeline is essential to ensure that it is working correctly. The pipeline should be monitored for errors, performance issues, and data quality issues. The monitoring and debugging process should be automated as much as possible to ensure that issues are identified and resolved quickly.

- Implement Security and Compliance: Security and compliance measures are critical to protect the data from unauthorized access and to comply with regulations and standards such as GDPR, HIPAA, and PCI DSS. The pipeline should encrypt data both in transit and at rest, and access to the data should be restricted based on each user’s role and level of authorization.

- Test and Validate the Pipeline: Testing and validating the pipeline is essential to ensure that it is working as expected. The pipeline should be tested for performance, scalability, and reliability. The data should also be validated to ensure that it is accurate and consistent.
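The testing, validation, and monitoring practices above often come down to automated data quality checks that run on every batch. The following Python sketch shows one way to do this with only the standard library; the rules (required fields, non-negative amounts, unique `order_id`), the field names, and the `QualityReport` class are hypothetical examples, not a prescribed standard.

```python
import logging
from dataclasses import dataclass, field

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline.quality")


@dataclass
class QualityReport:
    """Summary of a validation run that a monitoring job could log or alert on."""
    checked: int = 0
    errors: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.errors


def validate(records: list[dict], required: tuple[str, ...] = ("order_id", "amount")) -> QualityReport:
    """Check completeness, type, range, and uniqueness rules on processed records."""
    report = QualityReport()
    seen_ids: set = set()
    for i, record in enumerate(records):
        report.checked += 1
        missing = [name for name in required if record.get(name) in (None, "")]
        if missing:
            report.errors.append(f"row {i}: missing {missing}")
            continue  # later checks need these fields, so skip them for this row
        if not isinstance(record["amount"], (int, float)) or record["amount"] < 0:
            report.errors.append(f"row {i}: invalid amount {record['amount']!r}")
        if record["order_id"] in seen_ids:
            report.errors.append(f"row {i}: duplicate order_id {record['order_id']!r}")
        seen_ids.add(record["order_id"])
    # Monitoring hook: log a summary so failures show up in the pipeline's logs.
    if report.passed:
        logger.info("validated %d records, no issues", report.checked)
    else:
        logger.warning("validated %d records, %d issues", report.checked, len(report.errors))
    return report


batch = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 2, "amount": -5},
    {"order_id": 1, "amount": 3.5},
    {"order_id": 3, "amount": None},
]
report = validate(batch)
for error in report.errors:
    print(error)
```

In a real pipeline, a failed report might block the storage step or trigger an alert rather than just logging a warning.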

Wrapping up

A data pipeline is a crucial component for any organization that deals with large volumes of data. Implementing one effectively requires careful planning, consideration of various factors, and the right technology. By following the best practices outlined above, organizations can design and implement a data pipeline that meets their data requirements, scales with their needs, and provides high-quality data for informed decision-making.